QA Life Cycle & Software Testing Approaches

The IT industry follows defined processes in most areas: the overall project, development, software testing, management, etc. In software testing interviews, questions are asked mainly about the different life cycles: their phases, components, importance, etc. This article covers the life cycles followed in software testing and describes a few commonly used test approaches.

1. Software Testing Life Cycle

This section covers the Software Testing Life Cycle (STLC) and explains the activities performed in each of its phases.

2. What is the Software Testing Life Cycle?

The Software Testing Life Cycle (STLC) is the life cycle in which every testing activity is grouped into phases, and these phases are followed sequentially. It starts at the beginning of the project, when requirements are analyzed, and ends when the software testing activities are closed. The testing phases below, followed in sequence, form the Software Testing Life Cycle.

- Requirement Analysis

- Test Planning / Test Strategy

- Test Design / Test Case Development

- Test Bed / Environment Setup

- Test Execution

- Test Closure
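As a minimal sketch, the strictly sequential order of these phases can be modelled as an ordered Python enumeration. The `STLCPhase` enum and the `next_phase` helper are hypothetical names used only for illustration, not part of any testing tool.

```python
from enum import Enum
from typing import Optional


class STLCPhase(Enum):
    """STLC phases, declared in the order in which they are followed."""
    REQUIREMENT_ANALYSIS = 1
    TEST_PLANNING = 2
    TEST_DESIGN = 3
    ENVIRONMENT_SETUP = 4
    TEST_EXECUTION = 5
    TEST_CLOSURE = 6


def next_phase(current: STLCPhase) -> Optional[STLCPhase]:
    """Return the phase that follows `current`, or None after Test Closure."""
    phases = list(STLCPhase)  # iterating an Enum preserves declaration order
    index = phases.index(current)
    return phases[index + 1] if index + 1 < len(phases) else None


# Walk through the whole life cycle, starting from the first phase.
phase = STLCPhase.REQUIREMENT_ANALYSIS
while phase is not None:
    print(phase.name)
    phase = next_phase(phase)
```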

3. Explain the Requirement Analysis phase

In the requirement analysis phase, the requirement specifications shared by the customer are thoroughly analyzed and understood. Requirements are categorized as testable or non-testable, and a brainstorming session is held to identify ambiguous and unclear requirements. These are documented and shared with the customer as a query log so that each point is confirmed and nothing is misunderstood. Main motto: DO NOT ASSUME.

4. Explain the Test Planning / Test Strategy phase

The test manager or lead defines the test strategy, which determines factors such as the effort needed, scope, schedules/milestones, test approach, and cost estimates for the entire project. The Test Plan is designed based on the requirement analysis. It is a living document that captures all software testing activities, the scope of testing, resource information, etc.

5. Explain the Test Design / Test Case Development phase

The testing team writes detailed test cases for the testable requirements and prepares the test data needed for execution. All test cases are reviewed by customers, peers, or the lead to ensure good test coverage. A Requirement Traceability Matrix (RTM) is also prepared to map each test case to a testable requirement, which helps determine test coverage in terms of test cases.

In some projects, test scenarios are identified and documented in this phase instead of test cases. Scenarios are written at a very high level and state only what the scenario has to verify. Writing good test scenario documentation requires highly skilled testers.

The test cases / test scenarios written in this phase cover the smoke test and the regression test.
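To make the RTM idea concrete, here is a minimal sketch that stores the matrix as a mapping from test case to requirement and derives requirement coverage from it. The requirement and test case IDs are hypothetical, and "percentage of requirements with at least one test case" is an assumed coverage measure, not one the article prescribes.

```python
from typing import Dict, List

# Hypothetical requirement and test case IDs, for illustration only.
requirements = ["REQ-1", "REQ-2", "REQ-3"]

# The RTM maps each test case to the testable requirement it covers.
rtm: Dict[str, str] = {
    "TC-101": "REQ-1",
    "TC-102": "REQ-1",
    "TC-103": "REQ-2",
}


def coverage_report(reqs: List[str], matrix: Dict[str, str]) -> Dict[str, List[str]]:
    """Group test cases by requirement so uncovered requirements stand out."""
    report: Dict[str, List[str]] = {req: [] for req in reqs}
    for test_case, req in matrix.items():
        # setdefault keeps test cases that point at an unknown requirement visible
        report.setdefault(req, []).append(test_case)
    return report


report = coverage_report(requirements, rtm)
uncovered = [req for req, cases in report.items() if not cases]
coverage_pct = 100 * (len(requirements) - len(uncovered)) / len(requirements)
print(report)     # {'REQ-1': ['TC-101', 'TC-102'], 'REQ-2': ['TC-103'], 'REQ-3': []}
print(uncovered)  # ['REQ-3']
print(f"{coverage_pct:.1f}% of requirements covered")  # 66.7% of requirements covered
```

An uncovered requirement such as `REQ-3` is exactly the gap a test case review is meant to catch.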

6. Explain the Test Bed / Environment Setup phase

The testbed will be set up with all the required hardware and software. Once the Test Environment is set up, the Smoke test cases will be executed to check the readiness of the test environment.

7. Explain the Test Execution phase

Once the smoke test passes, the software testing team executes the regression test cases. Passed test cases are marked PASSED, and failed ones are marked FAILED. For every FAILED test case, the defect (bug) is logged in the bug management tool and linked to that test case. A test case may also be marked CONDITIONAL PASSED when minor issues do not hamper the functionality; the issue must still be logged in the bug management tool and linked to the test case. Test cases that cannot be executed because of blocker issues are marked BLOCKED, and the blocking issue is logged and linked to them. The test execution phase shows how completely and correctly the feature is implemented.
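The execution rules above can be sketched as a small data model that refuses a FAILED, CONDITIONAL PASSED, or BLOCKED result without a linked bug. This is a minimal sketch: `ExecutionResult`, `validate`, and the test case and bug IDs are hypothetical names, not the fields of any particular bug management tool.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Status(Enum):
    PASSED = "PASSED"
    FAILED = "FAILED"
    CONDITIONAL_PASSED = "CONDITIONAL PASSED"
    BLOCKED = "BLOCKED"


# Statuses that, per the process above, must be linked to a logged bug.
NEEDS_BUG_LINK = {Status.FAILED, Status.CONDITIONAL_PASSED, Status.BLOCKED}


@dataclass
class ExecutionResult:
    test_case_id: str
    status: Status
    linked_bugs: List[str] = field(default_factory=list)

    def validate(self) -> None:
        """Raise if a non-passing result has no bug linked to it."""
        if self.status in NEEDS_BUG_LINK and not self.linked_bugs:
            raise ValueError(
                f"{self.test_case_id} is {self.status.value} but has no linked bug")


results = [
    ExecutionResult("TC-101", Status.PASSED),
    ExecutionResult("TC-102", Status.FAILED, ["BUG-7"]),
    ExecutionResult("TC-103", Status.CONDITIONAL_PASSED, ["BUG-8"]),
    ExecutionResult("TC-104", Status.BLOCKED, ["BUG-9"]),
]
for result in results:
    result.validate()

# Count results per status for the execution summary.
summary = Counter(r.status.value for r in results)
print(dict(summary))
```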

8. Explain the Test Cycle Closure phase

The test cycle is closed based on criteria such as test coverage, quality, cost, time, and business objectives. A retrospective is also conducted in this phase to evaluate what went well, what went wrong, which areas need improvement, and what lessons were learned from the current cycle. Test metrics are prepared to analyze how far the success rate deviates from the plan.
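One way to express "how far the success rate deviates from the plan" is sketched below. The article does not define specific metrics, so the execution percentage, pass rate, and deviation in percentage points used here, along with the planned and actual numbers, are illustrative assumptions.

```python
def cycle_metrics(planned_cases: int, planned_pass_rate: float,
                  executed: int, passed: int) -> dict:
    """Compare a test cycle's actual results with its plan (assumed metrics)."""
    execution_pct = 100 * executed / planned_cases
    pass_rate = 100 * passed / executed if executed else 0.0
    return {
        "execution_pct": execution_pct,
        "pass_rate_pct": pass_rate,
        # Negative means the cycle fell short of the planned success rate.
        "deviation_pct_points": pass_rate - planned_pass_rate,
    }


# Hypothetical figures for one test cycle.
metrics = cycle_metrics(planned_cases=200, planned_pass_rate=95.0, executed=190, passed=171)
print(metrics)  # {'execution_pct': 95.0, 'pass_rate_pct': 90.0, 'deviation_pct_points': -5.0}
```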

9. Bug Management

In software testing interviews, questions on bug management (the bug life cycle, bug components, severity, and priority) are common and very important.

10. Explain the bug life cycle in Software Testing

Bug life cycle / bug management is one of the most important activities followed by the entire team (developers, testers, leads, managers). The bug life cycle should be followed strictly in projects because customers and stakeholders always keep a close eye on bugs. Moving bugs to the correct status in a timely manner helps determine the success of any component or project, and a large number of open bugs hampers the product's quality and the customer's confidence. Below is the life cycle of a bug that the tester logs during software testing.

- When the tester finds a bug, it is logged in the bug management tool with all the required information. The bug status is NEW at this point.

- Once the bug is logged, it is reviewed by the test lead/manager and assigned to the development team. Bug status moves from NEW to ASSIGNED at this stage.

- The developer reviews the bug, and if it is valid, opens it; the status moves from ASSIGNED to OPEN.

- Once the bug is fixed, the developer moves the bug status from OPEN to FIXED. At this stage, the bug is assigned back to the tester who logged it.

- The tester will then retest the fixed bug, and if it is working as expected, the status is marked as VERIFIED.

- Once the status moves to VERIFIED, the tester closes the bug by setting its status to CLOSED.

- If the bug is not fixed and the issue still exists, the tester reopens it and assigns it back to the developer with all the information gathered while retesting the bug. Here, the status moves from FIXED to REOPEN.

- The developer reviews the bug, and if more information is needed to analyze it, the developer moves the bug status to NEED MORE INFO and assigns it to the tester. The tester must then provide the required information and assign the bug back to the developer.

- The developer reviews the bug; if it is invalid, the developer moves the bug status to INVALID and closes it.

- The developer reviews the bug, and if the same issue has already been logged (for example, by another tester), the developer moves the status to DUPLICATE and closes it.

- The developer reviews the bug, and if it can't be fixed in the current release, the developer moves it to DEFERRED after discussion with the managers. This means the bug will be fixed in a future release.
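The life cycle above is essentially a state machine, so a minimal sketch can encode the allowed status transitions as a table and reject anything else. A few transitions are assumptions not spelled out in the list: a fix moves the bug from OPEN to FIXED, a reopened bug goes back to FIXED once the developer fixes it again, a deferred bug returns to ASSIGNED when a later release picks it up, and CLOSED, INVALID, and DUPLICATE are treated as terminal. The names `BugStatus`, `TRANSITIONS`, and `move` are hypothetical.

```python
from enum import Enum
from typing import Dict, Set


class BugStatus(Enum):
    NEW = "NEW"
    ASSIGNED = "ASSIGNED"
    OPEN = "OPEN"
    FIXED = "FIXED"
    VERIFIED = "VERIFIED"
    CLOSED = "CLOSED"
    REOPEN = "REOPEN"
    NEED_MORE_INFO = "NEED MORE INFO"
    INVALID = "INVALID"
    DUPLICATE = "DUPLICATE"
    DEFERRED = "DEFERRED"


# Allowed transitions; statuses with an empty set are terminal.
TRANSITIONS: Dict[BugStatus, Set[BugStatus]] = {
    BugStatus.NEW: {BugStatus.ASSIGNED},
    BugStatus.ASSIGNED: {BugStatus.OPEN, BugStatus.NEED_MORE_INFO, BugStatus.INVALID,
                         BugStatus.DUPLICATE, BugStatus.DEFERRED},
    BugStatus.OPEN: {BugStatus.FIXED, BugStatus.DEFERRED},
    BugStatus.FIXED: {BugStatus.VERIFIED, BugStatus.REOPEN},
    BugStatus.VERIFIED: {BugStatus.CLOSED},
    BugStatus.REOPEN: {BugStatus.FIXED},             # assumption: developer fixes it again
    BugStatus.NEED_MORE_INFO: {BugStatus.ASSIGNED},  # tester adds info, assigns it back
    BugStatus.DEFERRED: {BugStatus.ASSIGNED},        # assumption: picked up in a later release
    BugStatus.CLOSED: set(),
    BugStatus.INVALID: set(),
    BugStatus.DUPLICATE: set(),
}


def move(current: BugStatus, target: BugStatus) -> BugStatus:
    """Return `target` if the transition is allowed, otherwise raise ValueError."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"Illegal transition {current.value} -> {target.value}")
    return target


# A bug that fails its first retest, is fixed again, and is finally closed.
path = [BugStatus.ASSIGNED, BugStatus.OPEN, BugStatus.FIXED, BugStatus.REOPEN,
        BugStatus.FIXED, BugStatus.VERIFIED, BugStatus.CLOSED]
status = BugStatus.NEW
for next_status in path:
    status = move(status, next_status)
print(status.value)  # CLOSED
```

Keeping the transitions in one table makes illegal moves, such as closing a NEW bug directly, easy to detect.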

11. What are the different components of a bug? Explain them.

While logging a bug, the tester must provide a lot of information through the fields of the bug management tool. Below are the components generally found in any logged bug:

- Summary: Short title for a bug that summarizes what is happening. It should be catchy and clear. Just by reading the summary, anyone should understand what the issue is.

- Description: Detailed steps to reproduce the bug. The steps start from launching the application and continue until the bug is encountered. All the steps should be clearly described, with input data wherever needed.

- Actual Result: What is happening? The issue should be clearly described along with the analysis made. It is also good practice to include the URL of the page where the bug is seen.

- Expected Result: What should happen? The expected behavior should be described correctly, with references to the relevant requirement.

- Component: The component (feature) the bug belongs to. Selecting the correct feature matters because the bug is assigned to the developer working on that feature.

- Severity: Level of the impact of the bug – Critical, Major, Normal, Minor

- Priority: Urgency to fix the bug – Critical, High, Medium, Low

- Release info: The name of the application release in which the bug is found. The name depends on the project's release naming (for example, App 1.0, Maintenance 1.0, etc.).

- Build info: The build number, within the release, in which the bug is found.

- Environment: Testing environment name (testing, staging, prod, etc.). Since testing happens in several environments, this indicates the environment in which the bug is found.

- Operating System: OS version of the machine where a bug is found

- Browsers: All browsers in which the bug is encountered (usually one or two browsers are listed, unless cross-browser testing comes into the picture).

- Attachment: Screenshot or video recording of the bug. The attachment should indicate the area of the bug. Highlight all possible information that should be noted in the screenshots/video.
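A minimal sketch of these fields as a Python dataclass is shown below, with a check that the text fields are filled in and that severity and priority use the scales from this article. The class name, field names, and sample values are hypothetical; real bug management tools define their own fields and validation.

```python
from dataclasses import dataclass, field
from typing import List

SEVERITIES = ("Critical", "Major", "Normal", "Minor")
PRIORITIES = ("Critical", "High", "Medium", "Low")


@dataclass
class BugReport:
    summary: str
    description: str          # steps to reproduce, from app launch to the bug
    actual_result: str
    expected_result: str
    component: str
    severity: str
    priority: str
    release: str
    build: str
    environment: str
    operating_system: str
    browsers: List[str] = field(default_factory=list)
    attachments: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject values outside the severity and priority scales.
        if self.severity not in SEVERITIES:
            raise ValueError(f"Unknown severity: {self.severity}")
        if self.priority not in PRIORITIES:
            raise ValueError(f"Unknown priority: {self.priority}")
        # Every text field is treated as mandatory and must not be blank.
        for name in ("summary", "description", "actual_result", "expected_result",
                     "component", "release", "build", "environment", "operating_system"):
            if not getattr(self, name).strip():
                raise ValueError(f"Field '{name}' must not be empty")


bug = BugReport(
    summary="Submit button does nothing on the checkout page",
    description="1. Launch the app\n2. Add an item to the cart\n3. Click Submit",
    actual_result="No order is created; the page does not respond.",
    expected_result="An order is created as described in the requirement.",
    component="Checkout",
    severity="Critical",
    priority="Critical",
    release="App 1.0",
    build="1.0.42",
    environment="staging",
    operating_system="Windows 11",
    browsers=["Chrome"],
    attachments=["checkout-submit.png"],
)
```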

12. Explain different Severity Levels that the bug could have

- Critical / Show Stopper (S1): Bug that hampers critical functionality and stops the user from proceeding further with any actions. The user is blocked from performing any actions on the particular feature or application. Example: Submissions are not working at all, 404 errors, etc.

- Major or Severe (S2): A bug that makes the feature misbehave and produce wrong results falls into this category; the feature deviates completely from the requirement. Examples: wrong calculations, incorrect logic, no results for valid inputs, many results for invalid inputs, login without validation checks, broken links, etc.

- Moderate/ Normal (S3): A bug that indirectly harms the functionality. The hampered functionality can still be exercised in other ways to produce correct results. Examples: pages not updating instantly upon data input, broken images that still work when clicked, etc.

- Low or Minor (S4): A bug that does not impact functionality, such as UI, alignment, spelling, or grammar issues. Examples: logo misplaced, widget borders overlapping or crossing page borders, etc.

13. Explain different Priority Levels that the bug could have

- Priority 1 Critical (P1): The bug has to be fixed immediately, within a few hours of logging it.

- Priority 2 High (P2): Once the P1 bugs are fixed, P2 bugs are considered. These have to be fixed within 24-48 hours of logging them.

- Priority 3 Medium (P3): Once the P2 bugs are fixed, P3 bugs are considered. These must be fixed within 2-4 days of logging them, or at most within a week.

- Priority 4 Low (P4): Once the P3 bugs are fixed, P4 bugs are taken into consideration. These can be fixed in the next builds or next releases. Bugs that do not hamper functionality and are barely visible to the user are given this priority.
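The priority rules translate naturally into a fix queue with deadlines. This sketch assumes "a few hours" for P1 means 4 hours and uses the upper bound of each range above (48 hours for P2, 7 days for P3); P4 gets no fixed deadline. Ordering older bugs first within the same priority is also an assumption, and the bug IDs and dates are made up.

```python
from datetime import datetime, timedelta
from typing import List, NamedTuple, Optional

# Upper bounds of the fix windows; the 4-hour P1 window is an assumption.
FIX_WINDOW = {
    "P1": timedelta(hours=4),
    "P2": timedelta(hours=48),
    "P3": timedelta(days=7),
    "P4": None,  # next builds or next releases: no fixed deadline
}


class Bug(NamedTuple):
    bug_id: str
    priority: str
    logged_at: datetime


def due_by(bug: Bug) -> Optional[datetime]:
    """Deadline implied by the bug's priority, or None for P4."""
    window = FIX_WINDOW[bug.priority]
    return bug.logged_at + window if window is not None else None


def fix_queue(bugs: List[Bug]) -> List[Bug]:
    """P1 bugs first, then P2, and so on; older bugs first within a priority."""
    return sorted(bugs, key=lambda b: (b.priority, b.logged_at))


now = datetime(2024, 5, 10, 12, 0)
bugs = [
    Bug("BUG-3", "P3", datetime(2024, 5, 1, 9, 0)),
    Bug("BUG-1", "P1", datetime(2024, 5, 10, 9, 0)),
    Bug("BUG-4", "P4", datetime(2024, 4, 1, 9, 0)),
    Bug("BUG-2", "P2", datetime(2024, 5, 9, 9, 0)),
]
for queued in fix_queue(bugs):
    deadline = due_by(queued)
    if deadline is None:
        state = "no fixed deadline"
    else:
        state = "OVERDUE" if now > deadline else "on time"
    print(queued.bug_id, queued.priority, state)
```

Sorting on the `"P1"`..`"P4"` strings works here only because the priority numbers are single digits.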

14. Test approaches

In software testing interviews, questions are asked about which approach is best for testing and what factors to consider before deciding. Choosing the approach for testing a system/product/application is difficult because it involves many criteria. A few of them are as follows:

- Schedule

- Budget constraints

- Availability of skilled testers who can adapt to the approach

- The complexity of the system/product/application

- Expectations of the revenue returned

Software testing interview questions are often based on the testing phases of a given model. Here, we go through common approaches that place strong emphasis on testing activities. The approaches below can be tailored to suit the project, as long as the main process stays intact.

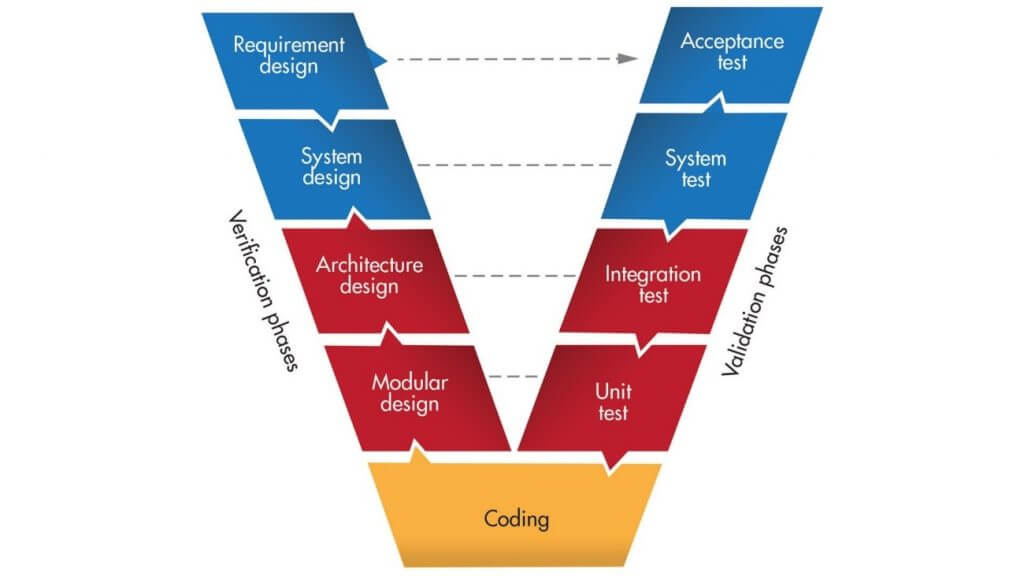

15. Explain the testing phases in the V-model

The V-model is the model in which development and testing go hand in hand: every development activity has a corresponding testing activity bound to it. The V-model is one of the approaches that help keep bugs out of the source code, since many bugs are found and fixed at an early stage of development. Below is how the model works:

- In the Requirements Study phase, testing is performed to identify and design User Acceptance Test Scenarios / Cases.

- During the system design phase, testing activities are performed to identify and design system test scenarios/cases.

- At the Architecture / High-level design phase, testing activities are performed to identify and design Integration Test Scenarios / Cases.

- At the Module / Low-level design phase, testing activities are performed to identify and design Unit Test Scenarios / Cases.

- After implementation, each level's test scenarios/cases are executed at the corresponding test stage.

Test Scenarios / Cases designed at particular stages are used in execution at their relevant phases.
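The pairing of development phases and test levels can be summarized in a small table, sketched below in Python. The executions order (unit tests first, user acceptance tests last) reflects the usual bottom-up reading of the right arm of the V; the list and function names are hypothetical.

```python
from typing import List

# Each development phase (left arm of the V) and the test level whose
# scenarios/cases are designed during it (right arm of the V).
V_MODEL = [
    ("Requirements Study", "User Acceptance Testing"),
    ("System Design", "System Testing"),
    ("Architecture / High-level Design", "Integration Testing"),
    ("Module / Low-level Design", "Unit Testing"),
]


def design_order() -> List[str]:
    """Test levels in the order their cases are designed (down the V)."""
    return [test_level for _, test_level in V_MODEL]


def execution_order() -> List[str]:
    """After implementation, execution climbs the V: unit tests first, UAT last."""
    return list(reversed(design_order()))


for dev_phase, test_level in V_MODEL:
    print(f"{dev_phase:<35} -> designs {test_level} cases")
print("Execution order:", " -> ".join(execution_order()))
```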

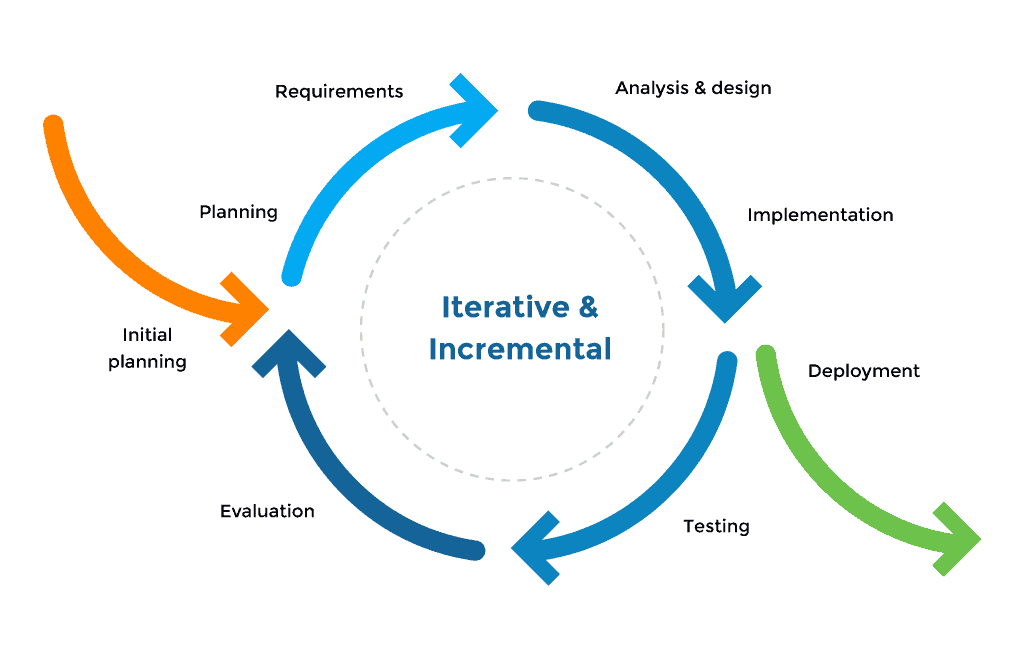

16. Explain the Iterative model

The Iterative model is the approach in which newly developed features are added to the existing system. Once the new features are added, the entire system is tested for both existing and new functionalities. Regression testing is performed extensively in this approach to maintain the quality of the whole system. The major goal is to ensure that all functionalities work correctly before a new feature is added and still work correctly after it is added. The approach also involves modifications to existing features and change requests (CRs).

Each release is divided into iterations, and each iteration integrates new features into the existing system and modifies existing ones. As the number of iterations grows, the testing effort needed to ensure the quality of the whole system increases significantly. Automation is preferred for regression testing of existing features, while new and modified features are tested manually.
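The split between automated regression and manual testing can be sketched per iteration as follows. This is a simplification under stated assumptions: features are plain names, unchanged existing features go to the automated regression suite, and new or modified features are tested manually. The feature names and the `plan_iteration` helper are hypothetical.

```python
from typing import Dict, List, Set


def plan_iteration(existing: Set[str], new: Set[str], modified: Set[str]) -> Dict[str, List[str]]:
    """Split one iteration's testing into automated regression and manual work."""
    unchanged = existing - modified
    return {
        "automated_regression": sorted(unchanged),
        "manual": sorted(new | modified),
    }


system: Set[str] = set()
iterations = [
    ({"login", "search"}, set()),          # iteration 1: (new, modified)
    ({"cart"}, {"search"}),                # iteration 2
    ({"checkout", "payments"}, {"cart"}),  # iteration 3
]
for number, (new, modified) in enumerate(iterations, start=1):
    plan = plan_iteration(system, new, modified)
    print(f"Iteration {number}: {plan}")
    system |= new  # the new features join the existing system
```

Running it shows the automated regression list growing from iteration to iteration, which is why automation pays off in this approach.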

17. Explain Agile Scrum

Agile Scrum is one of the most commonly followed approaches in the IT industry. Customers who expect quick returns on their investments choose this approach. Release timelines are short, usually one release every 2-3 months, and each release contains fewer new features and/or modifications to existing features. The standard process varies from project to project, i.e., the same approach is followed in different ways in different projects. Teams are smaller than in other approaches and are usually cross-skilled: business analysts, developers, testers, managers, and customers form a single team. Customer interaction is very high in Agile Scrum, and little documentation is produced. The common process goes as follows:

- Each release is divided into sprints of 2 weeks each. The activities below are performed within each sprint.

- Business analysts collect requirements from customers, customers' customers, backlog issues, etc. Each requirement is logged in the test management tool as a story, and its expectations are recorded as Acceptance Criteria.

- Each story is described to the entire team in Joint Application Development (JAD) sessions. Here, developers and testers discuss and decide which functionalities can be implemented and tested, and both sides provide high-level effort estimates.

- Stories are selected, and the sprint in which each story will be taken up is finalized.

- Developers proceed with designing and implementing the features. Testers identify the test scenarios for the features per the acceptance criteria. Smoke test and regression test scenarios are documented and reviewed by peers, leads, business analysts, and/or customers.

- Once the build is ready for testing, testers perform the smoke test for each of the stories implemented and will report blockers, if any. After passing the smoke test, testers will perform regression testing for stories and impacted features.

- Any issues found are logged into the bug management tool, and developers quickly act on them.

- Upon completion of regression testing and verification of the fixed bugs, testers report the success % for each story and prepare the retrospective document for the sprint. This document records what went well in the current sprint, what went wrong, and what could have been better.

- Retrospective points are discussed within the team, and corrective measures are taken to enhance the process further.

- Each story is given points at the end of the sprint based on effort, success, failure, open bugs, and fixed bugs.

- Any unfixed bug will move to the next sprint.

- Along with these activities, a scrum meeting is held daily by the Scrum master (usually the manager), where every team member has to update on their completed and current tasks, issues, risks, and dependencies. The Scrum master collects all the points from the entire team and coordinates with customers and/or teams to resolve issues then and there.

This common process varies from project to project. Because there is little documentation and tracking, this approach ensures product quality only if it is followed strictly. The team has to take responsibility for the tasks being carried out and hold itself accountable.
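The end-of-sprint bookkeeping described in the list above (success % per story, unfixed bugs moving to the next sprint) can be sketched as follows. Computing success % as passed scenarios over executed scenarios is an assumption, story points are left out, and `Story`, `close_sprint`, and all IDs are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Story:
    story_id: str
    passed: int                      # scenarios that passed
    total: int                       # scenarios executed for the story
    open_bugs: List[str] = field(default_factory=list)

    @property
    def success_pct(self) -> float:
        return 100 * self.passed / self.total if self.total else 0.0


def close_sprint(stories: List[Story]) -> Dict[str, object]:
    """Report success % per story and collect unfixed bugs for the next sprint."""
    return {
        "success_pct": {s.story_id: round(s.success_pct, 1) for s in stories},
        "carry_over_bugs": [bug for s in stories for bug in s.open_bugs],
    }


sprint_1 = [
    Story("STORY-11", passed=18, total=20, open_bugs=["BUG-31"]),
    Story("STORY-12", passed=12, total=12),
]
result = close_sprint(sprint_1)
print(result)  # {'success_pct': {'STORY-11': 90.0, 'STORY-12': 100.0}, 'carry_over_bugs': ['BUG-31']}
```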